Project: Taishan Pi Multimedia System

2025/12/26

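This work is a secondary development on top of an existing project. The environment is now up and running: the device (lower computer) can push a stream and the host (upper computer) can pull it.

Source directory: C:\Users\Shelton\Workspaces\code\projects\media_sys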

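- Host (upper computer)
  - Pull the RTSP stream
- Device (lower computer)
  - Device tree
    - ov5695
    - Ethernet port
  - Capture the camera with MPP
  - Push the RTSP stream with ZLMediaKit
  - Device tree
- Plan
  - The host sends annotation (drawn-region) coordinates to the device as JSON, and the device synchronizes them to every host program in real time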
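The planned annotation sync needs a message format that host and device agree on. The following is only a minimal sketch of what such a JSON message might look like. The `RectAnnotation` type and the field names `type`, `x`, `y`, `w`, `h` are assumptions made for illustration and are not part of the project. The encoder and decoder are hand-rolled to keep the example dependency-free; a real implementation would more likely use a JSON library.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical annotation: a rectangle drawn on the host, in pixel coordinates.
struct RectAnnotation {
    int x;
    int y;
    int w;
    int h;
};

// Serialize the rectangle into a compact JSON object.
std::string to_json(const RectAnnotation &r) {
    assert(r.w >= 0 && r.h >= 0);  // a rectangle cannot have a negative size
    char buf[128];
    std::snprintf(buf, sizeof(buf),
                  "{\"type\":\"rect\",\"x\":%d,\"y\":%d,\"w\":%d,\"h\":%d}",
                  r.x, r.y, r.w, r.h);
    return std::string(buf);
}

// Parse a message produced by to_json(); returns false on any mismatch.
// This accepts only the exact layout above and is not a general JSON parser.
bool from_json(const std::string &msg, RectAnnotation &out) {
    RectAnnotation r{};
    int consumed = 0;
    int n = std::sscanf(msg.c_str(),
                        "{\"type\":\"rect\",\"x\":%d,\"y\":%d,\"w\":%d,\"h\":%d}%n",
                        &r.x, &r.y, &r.w, &r.h, &consumed);
    if (n != 4 || consumed != static_cast<int>(msg.size())) {
        return false;
    }
    out = r;
    return true;
}
```

The device could decode each message with `from_json`, store the rectangle, and re-send the `to_json` output to every connected host. The transport (TCP, WebSocket, or something else) is left open here.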

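References

- Documentation

- RK forum

- EBAINA (易百纳) Rockchip section

- Firefly open-source community

Build environment

NFS

Reference: CSDN blog post "【 泰山派RK3566 | NFS】使用泰山派RK3566进行 NFS 网络文件挂载" (mounting an NFS network share on the Taishan Pi RK3566)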

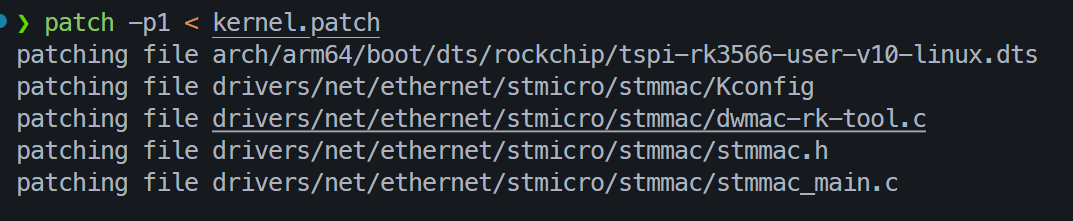

Patching the SDK

patch -p1 < kernel.patch

Firmware packaging script

#!/bin/bash

export RK_ROOTFS_SYSTEM=buildroot

sudo ./build.sh all

./mkfirmware.sh

sudo ./build.sh updateimg

cp rockdev/update.img /mnt/hgfs/Desktop/

rootfs directory

/home/shelton/Workspaces/media-sys/info/tspi_linux_sdk_repo_20240131/buildroot/output/rockchip_rk3566/target

Camera device tree

/home/shelton/Workspaces/media-sys/info/tspi_linux_sdk_repo_20240131/kernel/arch/arm64/boot/dts/rockchip/tspi-rk3566-csi-v10.dtsi

Camera driver source

/home/shelton/Workspaces/media-sys/info/tspi_linux_sdk_repo_20240131/kernel/drivers/media/i2c/ov5695.c

NFS directory

sudo vi /etc/exports

sudo service nfs-kernel-server restart

Host

The host program is written in Qt and relies mainly on the xvideoview library, which is a thin wrapper around FFmpeg and SDL. The plan is to wrap these more deeply later and to organize the code with the chain-of-responsibility pattern.
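To make the chain-of-responsibility idea concrete, here is a minimal sketch of how frame-processing stages might be linked. All names (`Frame`, `FrameHandler`, `ValidateHandler`, `ScaleHandler`, `RenderHandler`, `make_chain`) are hypothetical and do not come from xvideoview. The stages only record that they ran, standing in for real decode, scale, and render work.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical frame type; a real player would carry decoded pixel data.
struct Frame {
    int width = 0;
    int height = 0;
    std::vector<std::string> log;  // records which handlers touched the frame
};

// Base handler: each stage does its work, then forwards to the next stage.
class FrameHandler {
public:
    virtual ~FrameHandler() = default;

    // Attach the next handler and return a reference to it for chaining.
    FrameHandler &set_next(std::unique_ptr<FrameHandler> next) {
        assert(next != nullptr);
        next_ = std::move(next);
        return *next_;
    }

    // Returns false if any stage rejected the frame.
    bool handle(Frame &frame) {
        if (!process(frame)) {
            return false;  // stop the chain early
        }
        return next_ ? next_->handle(frame) : true;
    }

protected:
    virtual bool process(Frame &frame) = 0;

private:
    std::unique_ptr<FrameHandler> next_;
};

// Stage 1: reject empty frames.
class ValidateHandler : public FrameHandler {
protected:
    bool process(Frame &frame) override {
        frame.log.push_back("validate");
        return frame.width > 0 && frame.height > 0;
    }
};

// Stage 2: placeholder for scaling or colour conversion.
class ScaleHandler : public FrameHandler {
protected:
    bool process(Frame &frame) override {
        frame.log.push_back("scale");
        return true;
    }
};

// Stage 3: placeholder for rendering.
class RenderHandler : public FrameHandler {
protected:
    bool process(Frame &frame) override {
        frame.log.push_back("render");
        return true;
    }
};

// Build the chain validate -> scale -> render.
std::unique_ptr<FrameHandler> make_chain() {
    std::unique_ptr<FrameHandler> head = std::make_unique<ValidateHandler>();
    head->set_next(std::make_unique<ScaleHandler>())
        .set_next(std::make_unique<RenderHandler>());
    return head;
}
```

With this layout, a new stage such as drawing the synchronized annotations can be added by writing one more handler and linking it in `make_chain`, without touching the existing stages.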

Building the device source

CMake cross-compilation toolchain path #todo

# Example toolchain.cmake content

set(CMAKE_SYSTEM_NAME Linux)

set(CMAKE_SYSTEM_PROCESSOR aarch64)

# Cross-compiler location

set(CMAKE_C_COMPILER "/home/shelton/Workspaces/media-sys/info/tspi_linux_sdk_repo_20240131/buildroot/output/rockchip_rk3566/host/bin/aarch64-buildroot-linux-gnu-gcc") # adjust to your setup

set(CMAKE_CXX_COMPILER "/home/shelton/Workspaces/media-sys/info/tspi_linux_sdk_repo_20240131/buildroot/output/rockchip_rk3566/host/bin/aarch64-buildroot-linux-gnu-g++") # adjust to your setup

#set(CMAKE_C_COMPILER "/home/shelton/Workspaces/media-sys/info/tspi_linux_sdk_repo_20240131/prebuilts/gcc/linux-x86/aarch64/gcc-buildroot-9.3.0-2020.03-x86_64_aarch64-rockchip-linux-gnu/bin/aarch64-buildroot-linux-gnu-gcc") # adjust to your setup

#set(CMAKE_CXX_COMPILER "/home/shelton/Workspaces/media-sys/info/tspi_linux_sdk_repo_20240131/prebuilts/gcc/linux-x86/aarch64/gcc-buildroot-9.3.0-2020.03-x86_64_aarch64-rockchip-linux-gnu/bin/aarch64-buildroot-linux-gnu-g++") # adjust to your setup

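# Set the target system root directory (rockchip_rk3566, matching the compiler paths and the rootfs directory)

set(CMAKE_FIND_ROOT_PATH /home/shelton/Workspaces/media-sys/info/tspi_linux_sdk_repo_20240131/buildroot/output/rockchip_rk3566/target/) # adjust to your setup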

# Configure how CMake searches for programs, libraries, and headers

set(CMAKE_FIND_ROOT_PATH_MODE_PROGRAM NEVER)

set(CMAKE_FIND_ROOT_PATH_MODE_LIBRARY ONLY)

set(CMAKE_FIND_ROOT_PATH_MODE_INCLUDE ONLY)

Building the camera source

# Change the link order; use the following alternative

target_link_libraries(rk_cmake_project

pthread

rockit

rt_test_comm

opencv_core

opencv_imgproc

opencv_imgcodecs

# Drop opencv_highgui, or use the alternative further down

# opencv_highgui # this line is commented out

rknnrt

rga

turbojpeg

mk_api

)

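# Or simply do not link opencv_highgui (if no GUI display is needed)

The Qt version below also works, but after removing the Qt-related libraries the program ran on the board directly.

# First add the Qt5 find module (the Qt5 development package must be installed in the cross-compilation environment)

find_package(Qt5 COMPONENTS Widgets REQUIRED)

# Then change the linked libraries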

target_link_libraries(rk_cmake_project

pthread

rockit

rt_test_comm

opencv_core

opencv_highgui

opencv_imgproc

opencv_imgcodecs

rknnrt

rga

turbojpeg

mk_api

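Qt5::Widgets # add the Qt5 Widgets library

)

Board-side configuration

Library path: vi /etc/profile

export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/nfs/lib:/lib/rk_lib"

Startup script: vi /etc/init.d/rcS, which also mounts NFS

Reference: CSDN blog post "【 泰山派RK3566 | NFS】使用泰山派RK3566进行 NFS 网络文件挂载" (mounting an NFS network share on the Taishan Pi RK3566)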

#!/bin/sh

ifconfig eth0 up

ifconfig eth0 192.168.1.150 netmask 255.255.255.0

mount -t nfs -o nfsvers=3,nolock 192.168.1.200:/home/shelton/Workspaces/nfs_root /nfs

# Start all init scripts in /etc/init.d

# executing them in numerical order.

#

for i in /etc/init.d/S??* ;do

# Ignore dangling symlinks (if any).

[ ! -f "$i" ] && continue

case "$i" in

*.sh)

# Source shell script for speed.

(

trap - INT QUIT TSTP

set start

. $i

)

;;

*)

# No sh extension, so fork subprocess.

$i start

;;

esac

done

Device tree changes

OV5695 2-lane configuration (the sensor supports at most 2 data lanes)
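As a rough sanity check on why the lane count matters, the payload rate each lane must carry can be estimated from resolution, frame rate, and bit depth. The sketch below ignores blanking intervals and CSI-2 protocol overhead, so the real link rate has to be higher. The function name `lane_rate_mbps` is hypothetical, and any figures passed to it should be taken from the sensor datasheet and the driver's mode table rather than from this example.

```cpp
#include <cassert>
#include <cstdint>

// Estimate the payload bit rate per MIPI CSI-2 data lane in Mbit/s.
// Blanking and protocol overhead are ignored, so this is a lower bound.
double lane_rate_mbps(std::uint32_t width, std::uint32_t height,
                      std::uint32_t fps, std::uint32_t bits_per_pixel,
                      std::uint32_t lanes) {
    assert(lanes > 0);
    const double total_bits =
        static_cast<double>(width) * height * fps * bits_per_pixel;
    return total_bits / lanes / 1e6;
}
```

For example, assuming a 2592x1944 mode at 30 fps with 10 bits per pixel (illustrative values only), `lane_rate_mbps(2592, 1944, 30, 10, 2)` gives roughly 756 Mbit/s per lane, while the same mode on a single lane would need twice that.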

Source code

main

#include <chrono>

#include <cstdio>

#include <cstdlib>

#include <cstring>

#include <iostream>

#include <thread>

#include <opencv2/opencv.hpp>

#include <opencv/cv.hpp>

#include "yolov5.h"

#include "image_utils.h"

#include "file_utils.h"

#include "image_drawing.h"

#include "mk_mediakit.h"

#include "ThreadPool.h"

#include "rk_mpi.h"

// RKNN variables
rknn_app_context_t rknn_app_ctx;
const char *model_path = "/root/rknn_yolov5_demo/model/yolov5s_relu.rknn";

// MPI variables
int width = DISP_WIDTH;
int height = DISP_HEIGHT;
VENC_STREAM_S stFrame; // encoded stream structure; holds the stream read back from VENC
VIDEO_FRAME_INFO_S h264_frame; // frame information passed to the encoder
unsigned char *data = nullptr; // pointer to the buffer block

// Used for the FPS overlay
char fps_text[16];
float fps = 0;

// Thread pools
ThreadPool rknnPool(2);
ThreadPool h264encPool(1);

// ZLMediaKit media object
mk_media media;

/*********************************************
 * H.264 encoding task, including the RTSP push
 * 1. Overlay the FPS value on the image
 * 2. Send the video frame to VENC for encoding
 * 3. Read the encoded result and push the stream
 *********************************************/
void encode_task(cv::Mat frame) {
    // snprintf bounds the write to the 16-byte fps_text buffer
    snprintf(fps_text, sizeof(fps_text), "fps = %.2f", fps);
    cv::putText(frame, fps_text,
                cv::Point(40, 40),
                cv::FONT_HERSHEY_SIMPLEX, 1,
                cv::Scalar(0, 255, 0), 2);
    memcpy(data, frame.data, width * height * 3);

    /**************************
     * Send the raw image to VENC for encoding
     * 0 is the encoder channel ID
     * h264_frame holds the raw image information
     * -1 means block until the frame has been sent
     **************************/
    RK_MPI_VENC_SendFrame(0, &h264_frame, -1);

    /**************************
     * Fetch the encoded stream
     * 0 is the encoder channel ID
     * stFrame is a pointer to the stream structure
     * -1 means block until a stream is available
     **************************/
    RK_S32 s32Ret = RK_MPI_VENC_GetStream(0, &stFrame, -1);
    if (s32Ret != RK_SUCCESS) {
        RK_LOGE("RK_MPI_VENC_GetStream fail %x", s32Ret);
        return; // nothing was acquired, so there is nothing to release
    }
    void *pData = RK_MPI_MB_Handle2VirAddr(stFrame.pstPack->pMbBlk);
    uint32_t len = stFrame.pstPack->u32Len;

    // Push the stream
    static int64_t time_last = 0;
    // Current time point
    auto now = std::chrono::system_clock::now();
    // Milliseconds since 1970-01-01
    auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(now.time_since_epoch());
    // Millisecond timestamp
    int64_t timestamp = duration.count();
    int64_t fps_time = timestamp - time_last;
    // Floating-point division; skip the update if two frames share a millisecond
    if (fps_time > 0) {
        fps = 1000.0f / fps_time;
    }
    time_last = timestamp;

    // Named out_frame so it does not shadow the cv::Mat parameter
    mk_frame out_frame = mk_frame_create(MKCodecH264, timestamp, timestamp, (char*)pData, (size_t)len, NULL, NULL);
    mk_media_input_frame(media, out_frame);
    mk_frame_unref(out_frame);

    s32Ret = RK_MPI_VENC_ReleaseStream(0, &stFrame);
    if (s32Ret != RK_SUCCESS) {
        RK_LOGE("RK_MPI_VENC_ReleaseStream fail %x", s32Ret);
    }
}

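/*********************************************
 * RKNN inference task
 * 1. Run YOLOv5 inference and draw the boxes
 * 2. Wrap the buffer in a Mat and convert NV12 to RGB888 with OpenCV
 * 3. Submit the converted Mat frame to encode_task
 *********************************************/

void rknn_task(image_buffer_t src_image) {

// Run inference

object_detect_result_list od_results;

int ret = inference_yolov5_model(&rknn_app_ctx, &src_image, &od_results);

if (ret != 0)

{

printf("inference_yolov5_model fail! ret=%d\n", ret);

od_results.count = 0; // results are not valid; draw nothing but still encode the frame

}

// Draw boxes and confidence scores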

char text[256];

for (int i = 0; i < od_results.count; i++)

{

object_detect_result *det_result = &(od_results.results[i]);

printf("%s @ (%d %d %d %d) %.3f\n", coco_cls_to_name(det_result->cls_id),

det_result->box.left, det_result->box.top,

det_result->box.right, det_result->box.bottom,

det_result->prop);

int x1 = det_result->box.left;

int y1 = det_result->box.top;

int x2 = det_result->box.right;

int y2 = det_result->box.bottom;

draw_rectangle(&src_image, x1, y1, x2 - x1, y2 - y1, COLOR_BLUE, 3);

sprintf(text, "%s %.1f%%", coco_cls_to_name(det_result->cls_id), det_result->prop * 100);

draw_text(&src_image, text, x1, y1 - 20, COLOR_RED, 10);

}

// Convert to RGB888

cv::Mat yuv420sp(height + height / 2, width, CV_8UC1, src_image.virt_addr);

cv::Mat frame(height, width, CV_8UC3);

cv::cvtColor(yuv420sp, frame, cv::COLOR_YUV420sp2BGR); // COLOR_YUV2RGB_NV12

h264encPool.enqueue(encode_task, frame);

// encode_task(frame);

}

/*************************************
 * Video capture task
 * 1. Capture a video frame as VIDEO_FRAME_INFO_S
 * 2. Convert it to image_buffer_t for RKNN inference
 *************************************/

void capture_task() {

// Video frame information structure that stores the captured frame

VIDEO_FRAME_INFO_S stViFrame;

// get vi frame

RK_S32 s32Ret = RK_MPI_VI_GetChnFrame(0, 0, &stViFrame, -1);

if(s32Ret == RK_SUCCESS) {

image_buffer_t src_image;

src_image.width = stViFrame.stVFrame.u32Width;

src_image.height = stViFrame.stVFrame.u32Height;

src_image.format = IMAGE_FORMAT_YUV420SP_NV12;

src_image.virt_addr = reinterpret_cast<unsigned char*>(RK_MPI_MB_Handle2VirAddr(stViFrame.stVFrame.pMbBlk));

src_image.size = RK_MPI_MB_GetSize(stViFrame.stVFrame.pMbBlk);

src_image.fd = RK_MPI_MB_Handle2Fd(stViFrame.stVFrame.pMbBlk);

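rknnPool.enqueue(rknn_task, src_image);

// rknn_task(src_image);

// NOTE: the frame is released right after it is enqueued, so rknn_task may
// still be reading src_image.virt_addr when the buffer goes back to VI.

// Release only a frame that was actually acquired

s32Ret = RK_MPI_VI_ReleaseChnFrame(0, 0, &stViFrame);

if (s32Ret != RK_SUCCESS) {

RK_LOGE("RK_MPI_VI_ReleaseChnFrame fail %x", s32Ret);

}

}

}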

int main(int argc, char *argv[]) {

memset(fps_text, 0, 16);

/******************************* RKNN initialization *******************************/

memset(&rknn_app_ctx, 0, sizeof(rknn_app_context_t));

init_post_process();

int ret = init_yolov5_model(model_path, &rknn_app_ctx);

if (ret != 0)

{

printf("init_yolov5_model fail! ret=%d model_path=%s\n", ret, model_path);

}

/******************************* MPI initialization *******************************/

RK_S32 s32Ret = 0;

stFrame.pstPack = (VENC_PACK_S *)malloc(sizeof(VENC_PACK_S));

// Memory buffer pool configuration

MB_POOL_CONFIG_S PoolCfg;

memset(&PoolCfg, 0, sizeof(MB_POOL_CONFIG_S));

PoolCfg.u64MBSize = width * height * 3 ; // size of one buffer block (RGB888, 3 bytes per pixel)

PoolCfg.u32MBCnt = 1; // number of buffer blocks in the pool

PoolCfg.enAllocType = MB_ALLOC_TYPE_DMA; // memory allocation type

//PoolCfg.bPreAlloc = RK_FALSE;

MB_POOL src_Pool = RK_MPI_MB_CreatePool(&PoolCfg); // create the buffer pool

printf("Create Pool success !\n");

// Get one buffer block from the pool; returns a handle to that block

MB_BLK src_Blk = RK_MPI_MB_GetMB(src_Pool, width * height * 3, RK_TRUE);

// Configure the frame information passed to the encoder

h264_frame.stVFrame.u32Width = width;

h264_frame.stVFrame.u32Height = height;

h264_frame.stVFrame.u32VirWidth = width;

h264_frame.stVFrame.u32VirHeight = height;

h264_frame.stVFrame.enPixelFormat = RK_FMT_RGB888;

h264_frame.stVFrame.u32FrameFlag = 160;

h264_frame.stVFrame.pMbBlk = src_Blk; // attach the buffer block to the frame

// Map the buffer block to a virtual address; encode_task copies each Mat to this address

data = (unsigned char *)RK_MPI_MB_Handle2VirAddr(src_Blk);

// rkmpi init

if (RK_MPI_SYS_Init() != RK_SUCCESS) {

RK_LOGE("rk mpi sys init fail!");

// return -1;

}

// vi init

vi_dev_init();

vi_chn_init(0, width, height);

// venc init

RK_CODEC_ID_E enCodecType = RK_VIDEO_ID_AVC;

venc_init(0, width, height, enCodecType);

/******************************* ZLMediaKit initialization *******************************/

char *ini_path = mk_util_get_exe_dir("./config.ini");

mk_config config = {

0, // thread_num

0, // log_level

LOG_CONSOLE, // log_mask

NULL, // log_file_path

0, // log_file_days

1, // ini_is_path

ini_path, // ini

1, // ssl_is_path

NULL, // ssl

NULL // ssl_pwd

};

mk_env_init(&config);

mk_free(ini_path);

// Start the RTSP server; 8554 is the port number

mk_rtsp_server_start(8554, 0);

// Register event listeners

mk_events events = {

.on_mk_media_changed = NULL,

.on_mk_media_publish = NULL,

.on_mk_media_play = NULL,

.on_mk_media_not_found = NULL,

.on_mk_media_no_reader = NULL,

.on_mk_http_request = NULL,

.on_mk_http_access = NULL,

.on_mk_http_before_access = NULL,

.on_mk_rtsp_get_realm = NULL,

.on_mk_rtsp_auth = NULL,

.on_mk_record_mp4 = NULL,

.on_mk_shell_login = NULL,

.on_mk_flow_report = NULL

};

mk_events_listen(&events);

// Create the media source

// Corresponding URL: rtsp://ip:8554/live/camera

media = mk_media_create("__defaultVhost__", "live", "camera", 0, 0, 0);

// Add a video track

codec_args v_args = {0};

mk_track v_track = mk_track_create(MKCodecH264, &v_args);

// Initialize the media source's video track

mk_media_init_track(media, v_track);

// Finish adding tracks

mk_media_init_complete(media);

// Release the track reference

mk_track_unref(v_track);

while (1) {

// The main thread runs the video capture task

capture_task();

}

// Destroy resources

mk_media_release(media);

mk_stop_all_server();

// Destroy MB

RK_MPI_MB_ReleaseMB(src_Blk);

// Destroy Pool

RK_MPI_MB_DestroyPool(src_Pool);

RK_MPI_VI_DisableChn(0, 0);

RK_MPI_VI_DisableDev(0);

RK_MPI_VENC_StopRecvFrame(0);

RK_MPI_VENC_DestroyChn(0);

free(stFrame.pstPack);

RK_MPI_SYS_Exit();

return 0;

}

rk_mpi

#include "rk_mpi.h"

int vi_dev_init() {

printf("%s\n", __func__);

int ret = 0;

int devId = 0;

int pipeId = devId;

VI_DEV_ATTR_S stDevAttr;

VI_DEV_BIND_PIPE_S stBindPipe;

memset(&stDevAttr, 0, sizeof(stDevAttr));

memset(&stBindPipe, 0, sizeof(stBindPipe));

// 0. get dev config status

ret = RK_MPI_VI_GetDevAttr(devId, &stDevAttr);

if (ret == RK_ERR_VI_NOT_CONFIG) {

// 0-1.config dev

ret = RK_MPI_VI_SetDevAttr(devId, &stDevAttr);

if (ret != RK_SUCCESS) {

printf("RK_MPI_VI_SetDevAttr %x\n", ret);

return -1;

}

} else {

printf("RK_MPI_VI_SetDevAttr already\n");

}

// 1.get dev enable status

ret = RK_MPI_VI_GetDevIsEnable(devId);

if (ret != RK_SUCCESS) {

// 1-2.enable dev

ret = RK_MPI_VI_EnableDev(devId);

if (ret != RK_SUCCESS) {

printf("RK_MPI_VI_EnableDev %x\n", ret);

return -1;

}

// 1-3.bind dev/pipe

stBindPipe.u32Num = 1;

stBindPipe.PipeId[0] = pipeId;

ret = RK_MPI_VI_SetDevBindPipe(devId, &stBindPipe);

if (ret != RK_SUCCESS) {

printf("RK_MPI_VI_SetDevBindPipe %x\n", ret);

return -1;

}

} else {

printf("RK_MPI_VI_EnableDev already\n");

}

return 0;

}

int vi_chn_init(int channelId, int width, int height) {

int ret;

int buf_cnt = 2;

// VI init

VI_CHN_ATTR_S vi_chn_attr;

memset(&vi_chn_attr, 0, sizeof(vi_chn_attr));

vi_chn_attr.stIspOpt.u32BufCount = buf_cnt;

vi_chn_attr.stIspOpt.enMemoryType = VI_V4L2_MEMORY_TYPE_DMABUF; // VI_V4L2_MEMORY_TYPE_MMAP;

vi_chn_attr.stSize.u32Width = width;

vi_chn_attr.stSize.u32Height = height;

vi_chn_attr.enPixelFormat = RK_FMT_YUV420SP;

vi_chn_attr.enCompressMode = COMPRESS_MODE_NONE; // COMPRESS_AFBC_16x16;

vi_chn_attr.u32Depth = 2; //0, get fail, 1 - u32BufCount, can get, if bind to other device, must be < u32BufCount

ret = RK_MPI_VI_SetChnAttr(0, channelId, &vi_chn_attr);

ret |= RK_MPI_VI_EnableChn(0, channelId);

if (ret) {

printf("ERROR: create VI error! ret=%d\n", ret);

return ret;

}

return ret;

}

int venc_init(int chnId, int width, int height, RK_CODEC_ID_E enType) {

printf("%s\n",__func__);

VENC_RECV_PIC_PARAM_S stRecvParam;

VENC_CHN_ATTR_S stAttr;

memset(&stAttr, 0, sizeof(VENC_CHN_ATTR_S));

if (enType == RK_VIDEO_ID_AVC) {

stAttr.stRcAttr.enRcMode = VENC_RC_MODE_H264CBR;

stAttr.stRcAttr.stH264Cbr.u32BitRate = 10 * 1024;

stAttr.stRcAttr.stH264Cbr.u32Gop = 1;

} else if (enType == RK_VIDEO_ID_HEVC) {

stAttr.stRcAttr.enRcMode = VENC_RC_MODE_H265CBR;

stAttr.stRcAttr.stH265Cbr.u32BitRate = 10 * 1024;

stAttr.stRcAttr.stH265Cbr.u32Gop = 60;

} else if (enType == RK_VIDEO_ID_MJPEG) {

stAttr.stRcAttr.enRcMode = VENC_RC_MODE_MJPEGCBR;

stAttr.stRcAttr.stMjpegCbr.u32BitRate = 10 * 1024;

}

stAttr.stVencAttr.enType = enType;

stAttr.stVencAttr.enPixelFormat = RK_FMT_RGB888;

if (enType == RK_VIDEO_ID_AVC)

stAttr.stVencAttr.u32Profile = H264E_PROFILE_HIGH;

stAttr.stVencAttr.u32PicWidth = width;

stAttr.stVencAttr.u32PicHeight = height;

stAttr.stVencAttr.u32VirWidth = width;

stAttr.stVencAttr.u32VirHeight = height;

stAttr.stVencAttr.u32StreamBufCnt = 2;

stAttr.stVencAttr.u32BufSize = width * height * 3 / 2;

stAttr.stVencAttr.enMirror = MIRROR_NONE;

RK_MPI_VENC_CreateChn(chnId, &stAttr);

memset(&stRecvParam, 0, sizeof(VENC_RECV_PIC_PARAM_S));

stRecvParam.s32RecvPicNum = -1;

RK_MPI_VENC_StartRecvFrame(chnId, &stRecvParam);

return 0;

}
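One point in the main listing deserves care: capture_task hands a pointer into the VI frame to a worker and then releases the frame. A common way to avoid reading a buffer after it has been returned is to give each task its own copy of the pixels. The following is a minimal sketch of that idea in plain C++, with no Rockchip APIs. `DriverFrame`, `Task`, and `make_owned_task` are hypothetical names, and summing the bytes merely stands in for inference. Copying costs memory bandwidth, so holding the frame until the worker finishes is the alternative trade-off.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <numeric>
#include <utility>
#include <vector>

// Stand-in for a driver-owned buffer that becomes invalid after release.
struct DriverFrame {
    const std::uint8_t *data;
    std::size_t size;
};

// A deferred unit of work, as a thread pool would store it.
using Task = std::function<std::uint64_t()>;

// Build a task that owns a private copy of the pixels, so the driver
// frame can be released as soon as this function returns.
Task make_owned_task(const DriverFrame &f) {
    assert(f.data != nullptr || f.size == 0);
    std::vector<std::uint8_t> pixels(f.data, f.data + f.size);  // deep copy
    return [pixels = std::move(pixels)]() {
        // Stand-in for inference: sum the bytes of the owned copy.
        return std::accumulate(pixels.begin(), pixels.end(), std::uint64_t{0});
    };
}
```

Because the lambda captures the vector by value, the task keeps working on the original pixel values even if the driver reuses or overwrites the source buffer before the task runs.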